Abstract

Flaky tests hinder the development process by exhibiting uncertain behavior in regression testing. A flaky test may pass in some runs and fail in others while running on the same code version. This non-deterministic outcome frequently misleads developers into debugging non-existent faults in the code. To debug flaky tests effectively, developers need to reproduce them. The de facto industry practice for reproducing flaky tests is to rerun them multiple times. However, rerunning a flaky test numerous times is time- and resource-consuming.

This work presents a technique for rapidly and reliably reproducing timing-dependent GUI flaky tests, acknowledged as the most common type of flaky tests in Android apps. Our insight is that flakiness in such tests often stems from event races on GUI data. Given the stack traces of a failure, our technique employs dynamic analysis to infer the event races likely to have led to the failure and reproduces it by selectively delaying only the events involved in these races. Thus, our technique can reproduce a failure within a minimal number of test runs. Experiments conducted on 80 timing-dependent flaky tests collected from 22 widely used Android apps show that our technique reproduces flaky test failures efficiently. Out of the 80 flaky tests, our technique successfully reproduced 73 within 1.71 test runs on average. Notably, it exhibited extremely high reliability by consistently reproducing the failure across 20 runs.

Dataset

To evaluate our tool, we reproduced 80 timing-dependent flaky tests in 22 real-world Android apps. The following table shows detailed information about these flaky test cases and apps, where "Stars" characterizes the app's popularity in terms of stars on GitHub, "Version" denotes the version number of the app used in our study, and "Context" describes the purpose of the test case. Details of each test are available through the hyperlink on its "Test Name".

| TestID | Test Name | App Name | Stars | Version | Context |
|---|---|---|---|---|---|

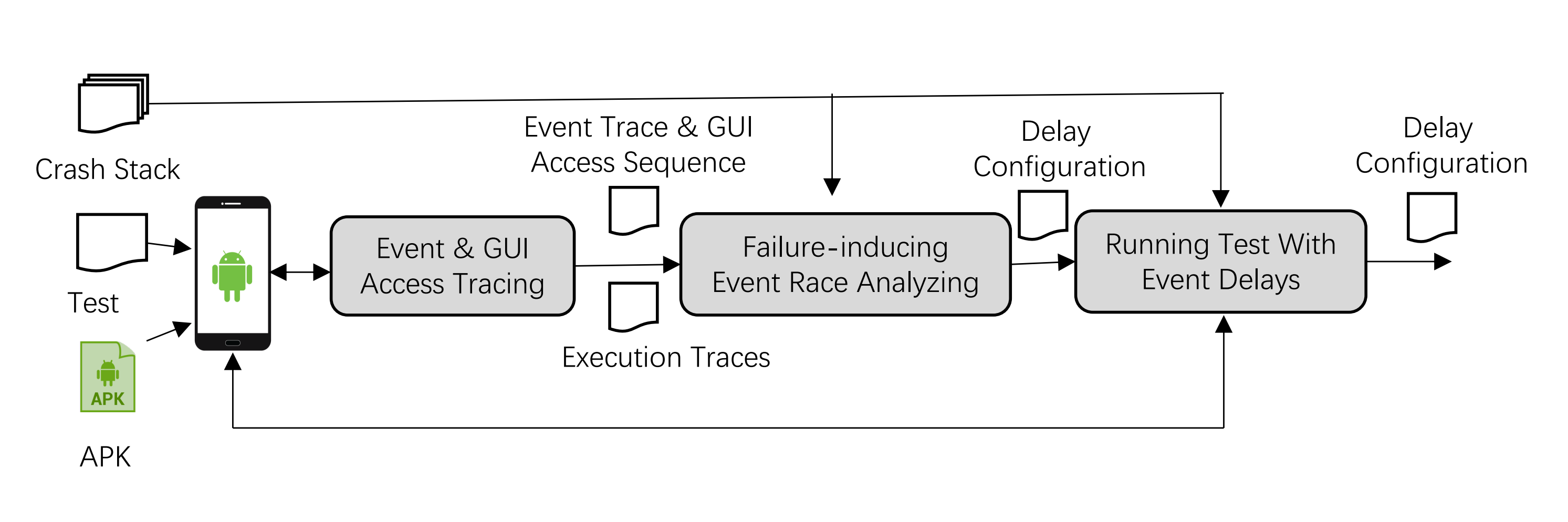

Workflow

The workflow of FlakReaper is depicted in the following figure. FlakReaper takes the app under test and the test case as input and performs a dynamic analysis. During the analysis, it monitors and records the events executed and the GUI access operations, and it captures the execution traces of the test run. Afterward, it analyzes the collected runtime data and crash stacks to identify event races that could have caused the failure. FlakReaper then reruns the test while enforcing an order among the events involved in a race to manifest the failure. To establish an order between two events, FlakReaper delays one event so that the other executes first. If the test run successfully reproduces the observed flaky behavior, FlakReaper outputs the delay configuration.
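The race-inference and delay steps can be illustrated with a minimal sketch. It is not the tool's implementation: the `GuiAccess` record, the `ordered_pairs` set of already-ordered event pairs, the `failing_widgets` set (standing in for GUI elements named in the crash stack), and the output dictionary format are all hypothetical names chosen for illustration. The sketch treats two events as a candidate race when they access the same failure-related GUI element, at least one access is a write, and no order between them is already known.

```python
from dataclasses import dataclass
from itertools import combinations


@dataclass(frozen=True)
class GuiAccess:
    event_id: str   # event whose handler performed the access (hypothetical id)
    widget: str     # GUI element that was accessed (hypothetical id)
    is_write: bool  # True for an update, False for a read


def candidate_races(accesses, ordered_pairs, failing_widgets):
    """Return event pairs, in observed order, that may race on a failing widget."""
    races = set()
    # combinations() preserves log order, so `a` was observed before `b`.
    for a, b in combinations(accesses, 2):
        if a.event_id == b.event_id or a.widget != b.widget:
            continue
        if a.widget not in failing_widgets:
            continue
        if not (a.is_write or b.is_write):
            continue  # two reads cannot conflict
        if (a.event_id, b.event_id) in ordered_pairs:
            continue  # order already enforced, so not a race
        if (b.event_id, a.event_id) in ordered_pairs:
            continue
        races.add((a.event_id, b.event_id))
    return sorted(races)


def delay_configs(races):
    """For a race observed as (first, second), delay `first` so `second` runs first."""
    return [{"delay": first, "run_first": second} for first, second in races]


# Illustrative log only; these events and widgets are not from the dataset.
log = [
    GuiAccess("load_data", "listView", True),
    GuiAccess("click_item", "listView", False),
    GuiAccess("load_data", "title", True),
]
configs = delay_configs(candidate_races(log, set(), {"listView"}))
```

Each resulting configuration flips the order observed in the recorded run, which mirrors the idea of delaying one event so that the other executes first.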

Operation Guidance

Prerequisites

- Android emulator (API 30) with the EdXposed Framework installed

- Android Studio (Hedgehog | 2023.1.1 Canary 10) with the YAMP plugin installed

- Java 17, Gradle 8.0, and Android Gradle Plugin 8.0.0

Usage

- Install the app module and the timelord module of FlakeEcho on an Android emulator (API 30) that has the EdXposed Framework installed. You can verify that both modules are installed correctly in the EdXposedManager module list.

- To trace the messageQueue and the GUI-related operations during an instrumentation test, make sure that in EdXposedManager the control switch of the app module is on and that of the timelord module is off.

- Run the instrumentation test in Android Studio with the YAMP plugin enabled to record the runtime method call stack, which is output as a .trace file. The log of GUI-related operations should be generated under the /sdcard directory in the emulator, named {timestamp}.txt.

- Run the Analyzer module with the .trace file and {timestamp}.txt as input. It generates a static_id_list.txt file under the /sdcard directory in the emulator, which contains the static ID list.

- To inject a single delay in the instrumentation test, make sure that in EdXposedManager the control switch of the app module is off and that of the timelord module is on. Then run the instrumentation test again; the delay will be injected to reproduce the failure of the flaky test.
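When several tracing runs have left multiple {timestamp}.txt logs under /sdcard, a small helper can pick the most recent one and build the command to copy it to the host. This is only a sketch under stated assumptions: it assumes the timestamp part of the file name consists solely of digits, and the function names `latest_gui_log` and `adb_pull_command` are hypothetical. It only constructs the `adb pull` argument list and does not run it.

```python
import re

# Assumption: the {timestamp} part of the log name is purely numeric.
LOG_NAME = re.compile(r"^(\d+)\.txt$")


def latest_gui_log(names):
    """Return the file name with the largest numeric timestamp, or None."""
    best_stamp, best_name = None, None
    for name in names:
        match = LOG_NAME.match(name)
        if match is None:
            continue  # skips files such as static_id_list.txt
        stamp = int(match.group(1))
        if best_stamp is None or stamp > best_stamp:
            best_stamp, best_name = stamp, name
    return best_name


def adb_pull_command(name, dest="."):
    """Build (but do not execute) an `adb pull` command for a file under /sdcard."""
    return ["adb", "pull", f"/sdcard/{name}", dest]
```

The returned list can be passed to `subprocess.run` on a machine where `adb` is on the PATH and the emulator is running.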

Experiment Results

Effectiveness Study

| TestID | Test Name | Total Number of Events | Number of Event Races | Rerun Times to Reproduce Failures | Total Time to Reproduce Failures (s) | Execution Time to Trace Methods and Stack Trace (s) | Execution Time without FlakReaper (s) | Overhead |
|---|---|---|---|---|---|---|---|---|

Entropy of Runtime Difference

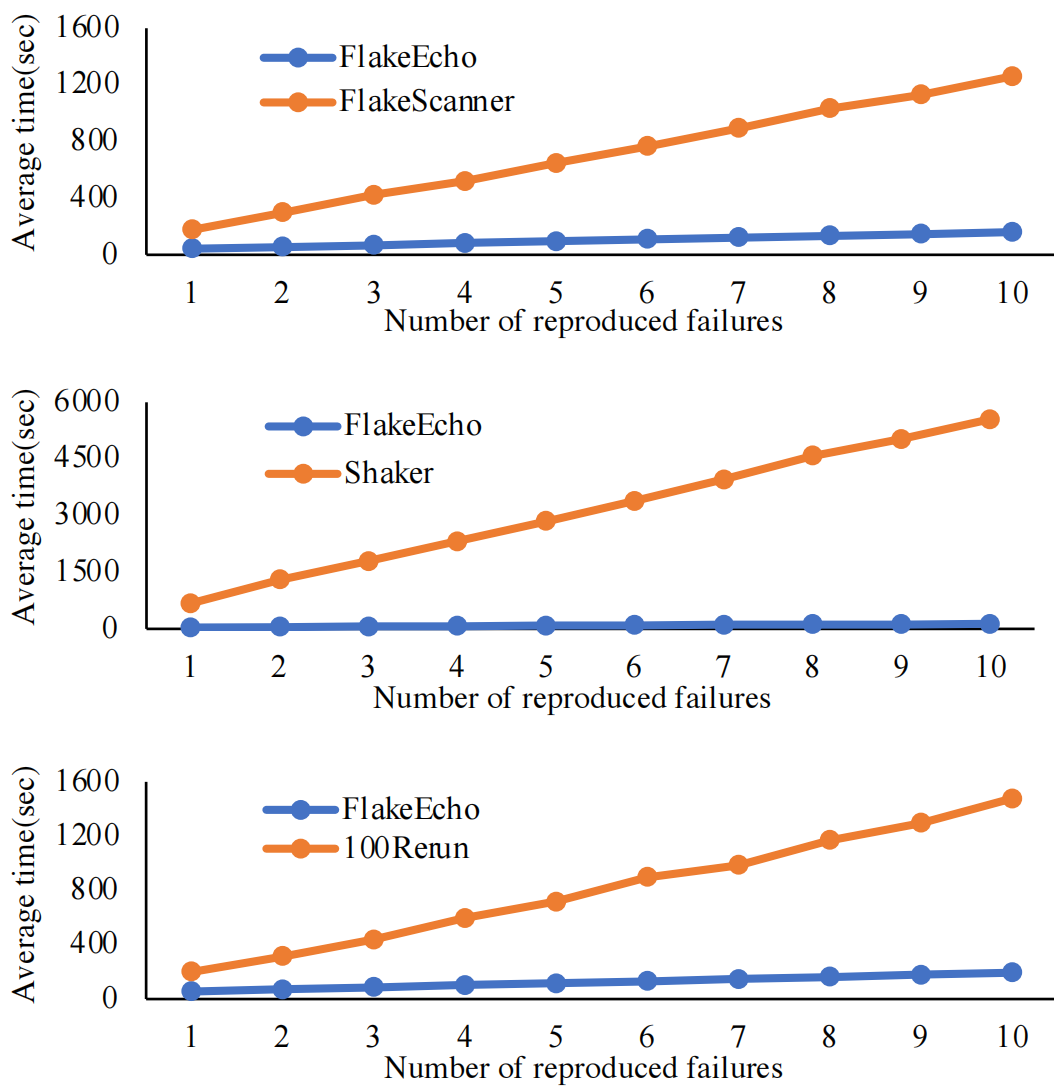

Our study compared the efficiency of failure reproduction by FlakeEcho against other tools. Because no tool reproduced every case, each comparison was made on the set of tests shared by the tools being compared. The results show that FlakeEcho generally reproduces failures more quickly and with fewer test runs, highlighting its effectiveness, especially beyond the second reproduction attempt.
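The idea of comparing two tools only on their shared test set can be sketched as follows. This is an illustrative calculation, not the script used in the study: the function name `compare_on_shared`, the input format (a mapping from TestID to seconds needed to reproduce the failure), and the sample numbers are all assumptions.

```python
from statistics import mean


def compare_on_shared(times_a, times_b):
    """Average reproduction time of two tools over the tests both reproduced.

    Each argument maps a test id to the seconds that tool needed; tests a tool
    failed to reproduce are simply absent from its mapping.
    """
    shared = sorted(times_a.keys() & times_b.keys())
    if not shared:
        return None  # no common ground for a fair comparison
    return {
        "shared_tests": shared,
        "mean_a": mean(times_a[t] for t in shared),
        "mean_b": mean(times_b[t] for t in shared),
    }


# Made-up numbers for illustration only; they are not results from the study.
tool_a = {"T1": 10.0, "T2": 20.0, "T3": 30.0}
tool_b = {"T2": 40.0, "T3": 60.0, "T4": 5.0}
summary = compare_on_shared(tool_a, tool_b)
```

Restricting both averages to the intersection prevents a tool from looking faster merely because it skipped the harder cases.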

The following tables present the statistics of the study. The columns correspond to the tools being compared: FlakeEcho against FlakeScanner, Shaker, and 100Rerun. The rows correspond to the number of reproduced failures, and each cell gives the average time taken, in seconds.

Data contrasts FlakeEcho with FlakeScanner

Data contrasts FlakeEcho with Shaker

Data contrasts FlakeEcho with 100Rerun